(Hands-On) Hyperparameter Tuning

1. Open the notebook

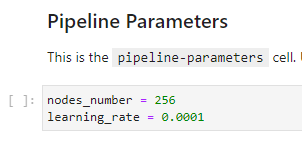

Go back to the Notebooks page in your Kubeflow UI and open the notebook named dog-breed-katib.ipynb. You are going to run hyperparameter tuning experiments on the ResNet-50 model using Katib. Notice the cell at the beginning of the notebook that declares the parameters:

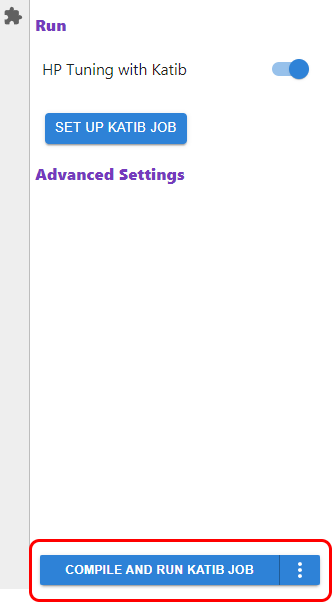
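To show the general shape of such a cell, here is a minimal sketch, assuming a Kale parameters cell (in Kale, typically the cell tagged `pipeline-parameters`) made of plain assignments of literal values. The names and defaults below are hypothetical, not the ones in dog-breed-katib.ipynb.

```python
# Hypothetical parameters cell; names and default values are illustrative only.
# Each parameter is a plain assignment of a literal, so its type can be read
# directly from the value.
LR = 1e-4        # float literal: learning rate
BATCH_SIZE = 32  # int literal: images per training batch
EPOCHS = 3       # int literal: passes over the training data
```

Keeping the cell to simple literal assignments is what lets a tool read both the parameter names and their types without executing the rest of the notebook.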

2. Run the hyperparameter tuning

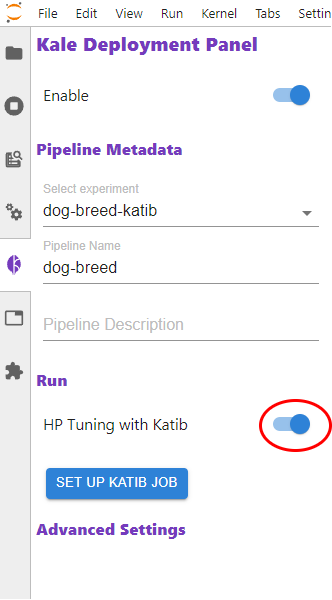

In the left pane of the notebook, enable HP Tuning with Katib to run hyperparameter tuning:

3. Configure Katib

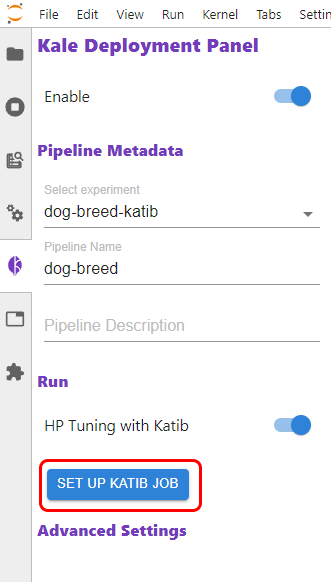

Then click on SET UP KATIB JOB to configure Katib:

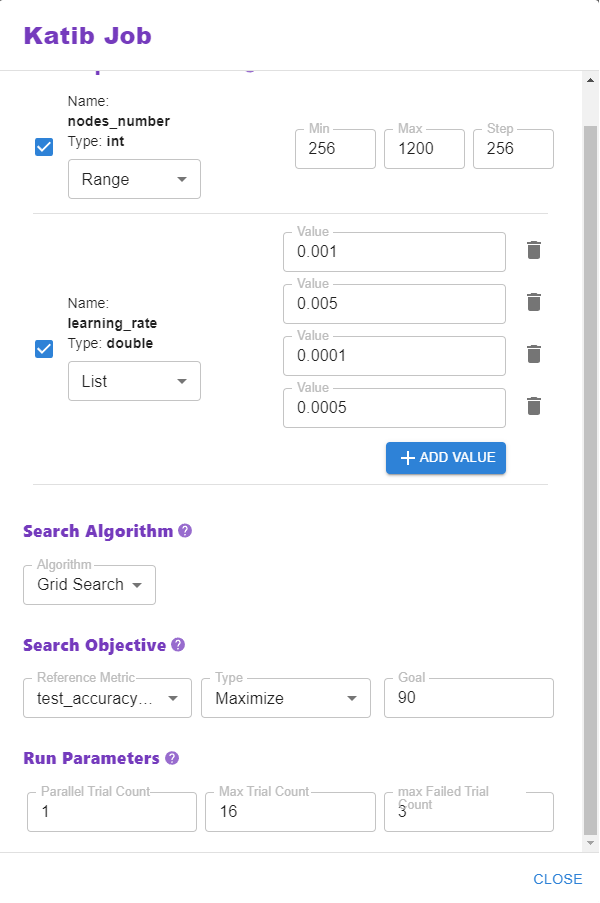

Kale auto-detects the HP tuning parameters and their type from the notebook, due to the way we defined the parameters cell in the notebook. Define the search space for each parameter, and define a goal:
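Kale builds the Katib experiment from what you enter in this dialog. As a rough sketch of what a search space and goal amount to, the dict below mirrors field names from Katib's v1beta1 Experiment spec (`objective`, `algorithm`, `parallelTrialCount`, `maxTrialCount`, `maxFailedTrialCount`, `parameters`, `feasibleSpace`). The parameter names, ranges, metric name, and goal are hypothetical, and `check_search_space` is an illustrative helper, not part of Katib or Kale.

```python
# Approximate shape of a Katib v1beta1 Experiment spec, written as a Python dict.
# All values are hypothetical; Kale generates the real spec from the dialog.
experiment_spec = {
    "objective": {
        "type": "maximize",                 # or "minimize"
        "goal": 0.90,                       # stop once the metric reaches this value
        "objectiveMetricName": "accuracy",  # hypothetical metric name
    },
    "algorithm": {"algorithmName": "random"},
    "parallelTrialCount": 2,
    "maxTrialCount": 8,
    "maxFailedTrialCount": 2,
    "parameters": [
        {"name": "LR", "parameterType": "double",
         "feasibleSpace": {"min": "0.00001", "max": "0.001"}},
        {"name": "BATCH_SIZE", "parameterType": "int",
         "feasibleSpace": {"min": "16", "max": "64"}},
    ],
}


def check_search_space(spec):
    """Return a list of problems found in the ranges and trial counts of a spec."""
    problems = []
    for param in spec["parameters"]:
        space = param["feasibleSpace"]
        # Katib expresses numeric bounds as strings, so convert before comparing.
        if "min" in space and float(space["min"]) >= float(space["max"]):
            problems.append(f"{param['name']}: min must be below max")
    if spec["parallelTrialCount"] > spec["maxTrialCount"]:
        problems.append("parallelTrialCount exceeds maxTrialCount")
    return problems


print(check_search_space(experiment_spec))  # []
```

The objective, the algorithm, and the parameter ranges are the only things the tuner needs to know; nothing in the spec describes what the trial actually computes.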

4. Compile and run Katib job

Click the COMPILE AND RUN KATIB JOB button:

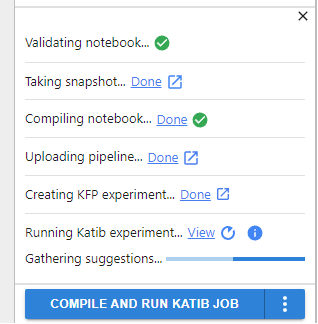

5. Watch the progress

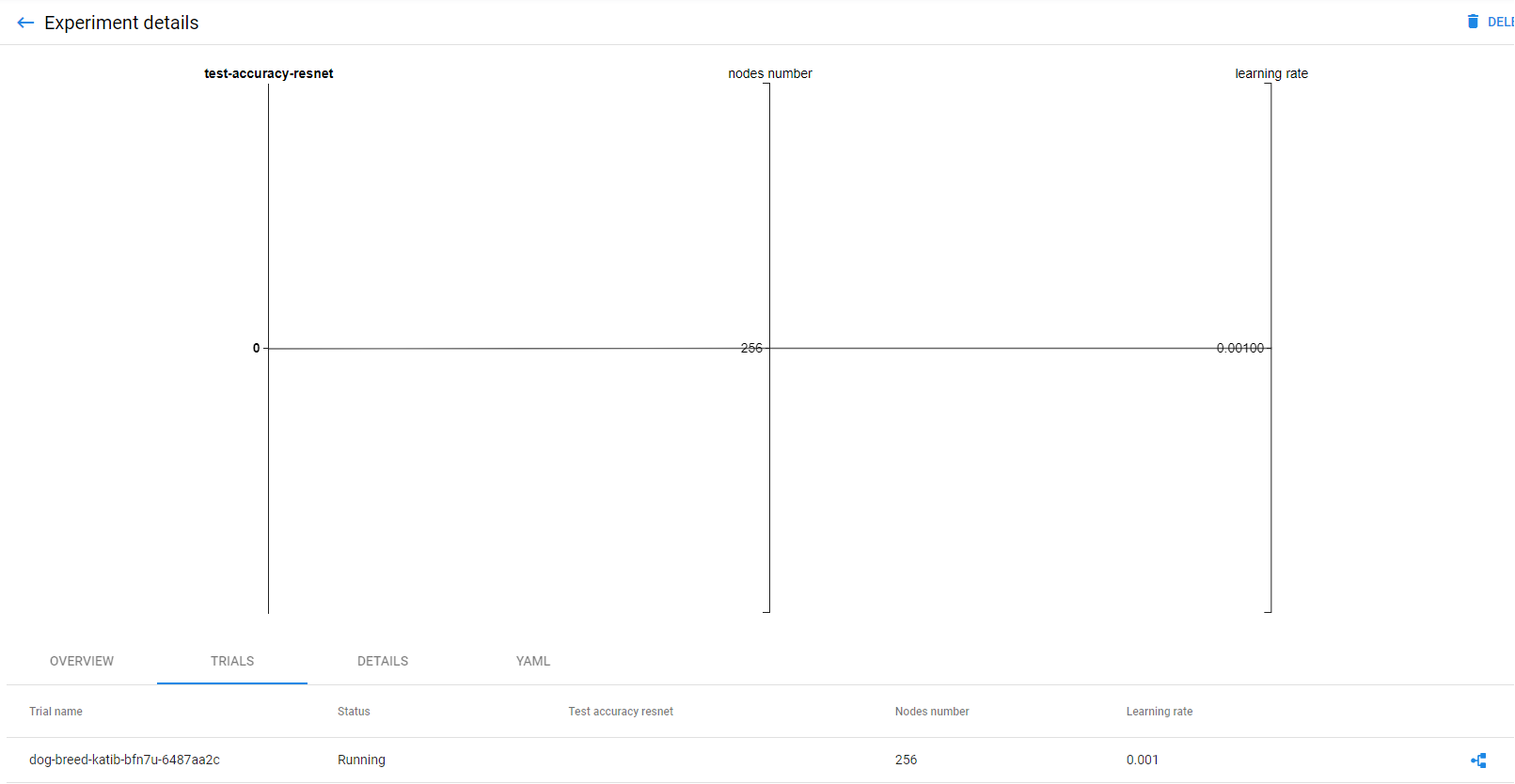

Watch the progress of the Katib experiment in real time:

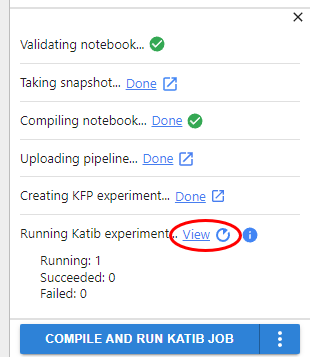

6. View the Katib experiment

Click View to see the Katib experiment:

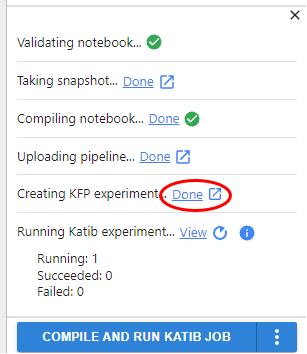

7. View the runs in KFP experiment

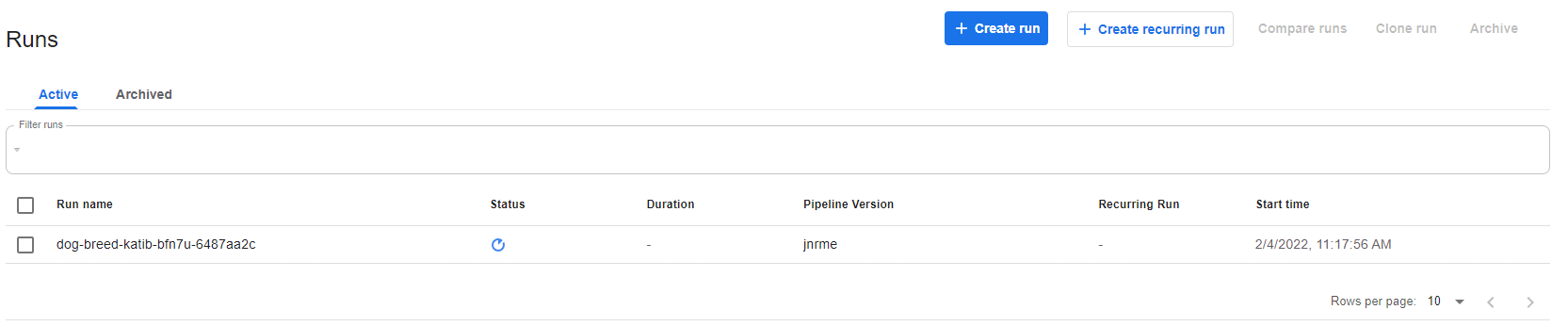

Click Done to see the runs in the Kubeflow Pipelines (KFP) experiment:

8. View the new trials and runs

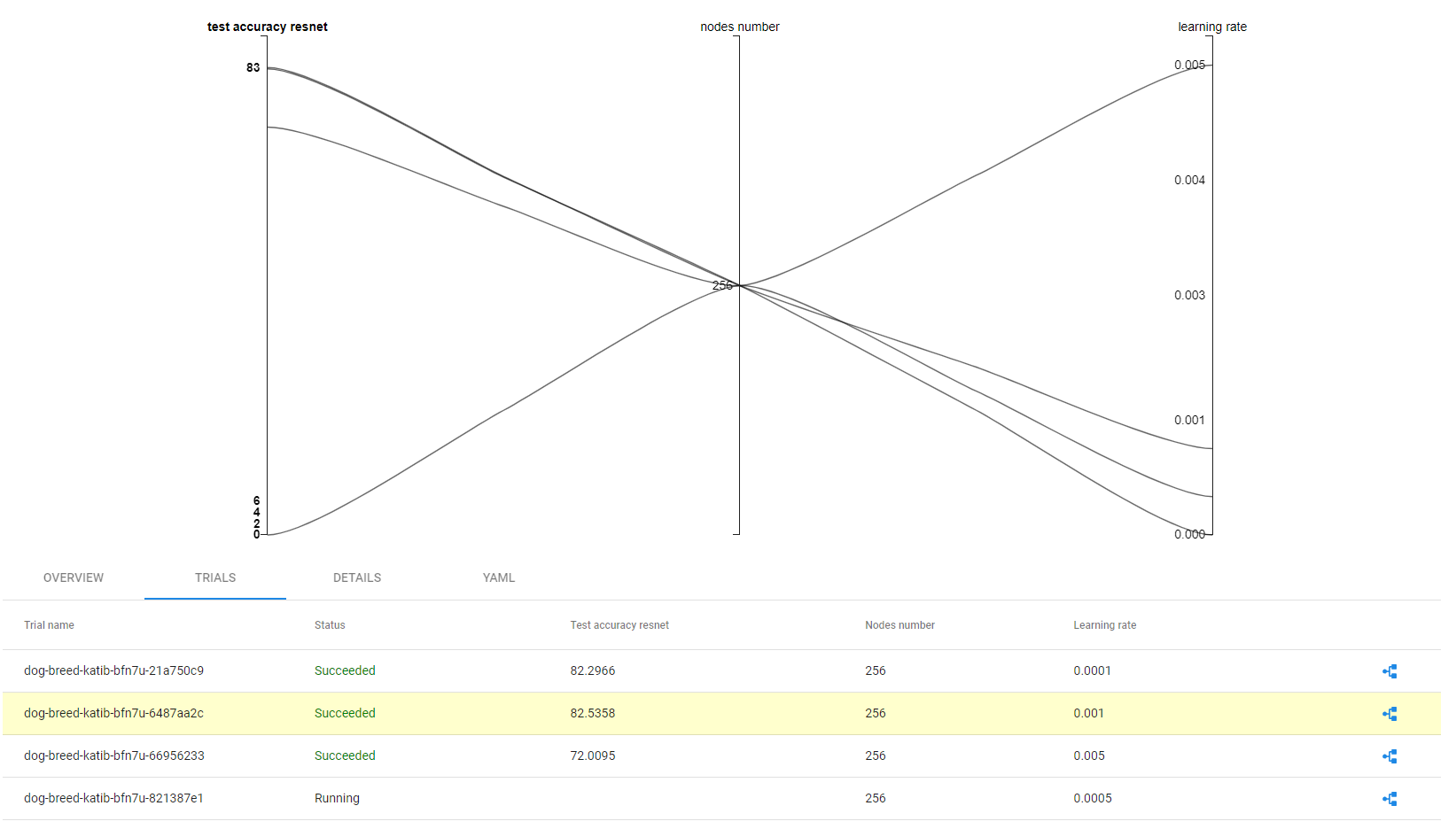

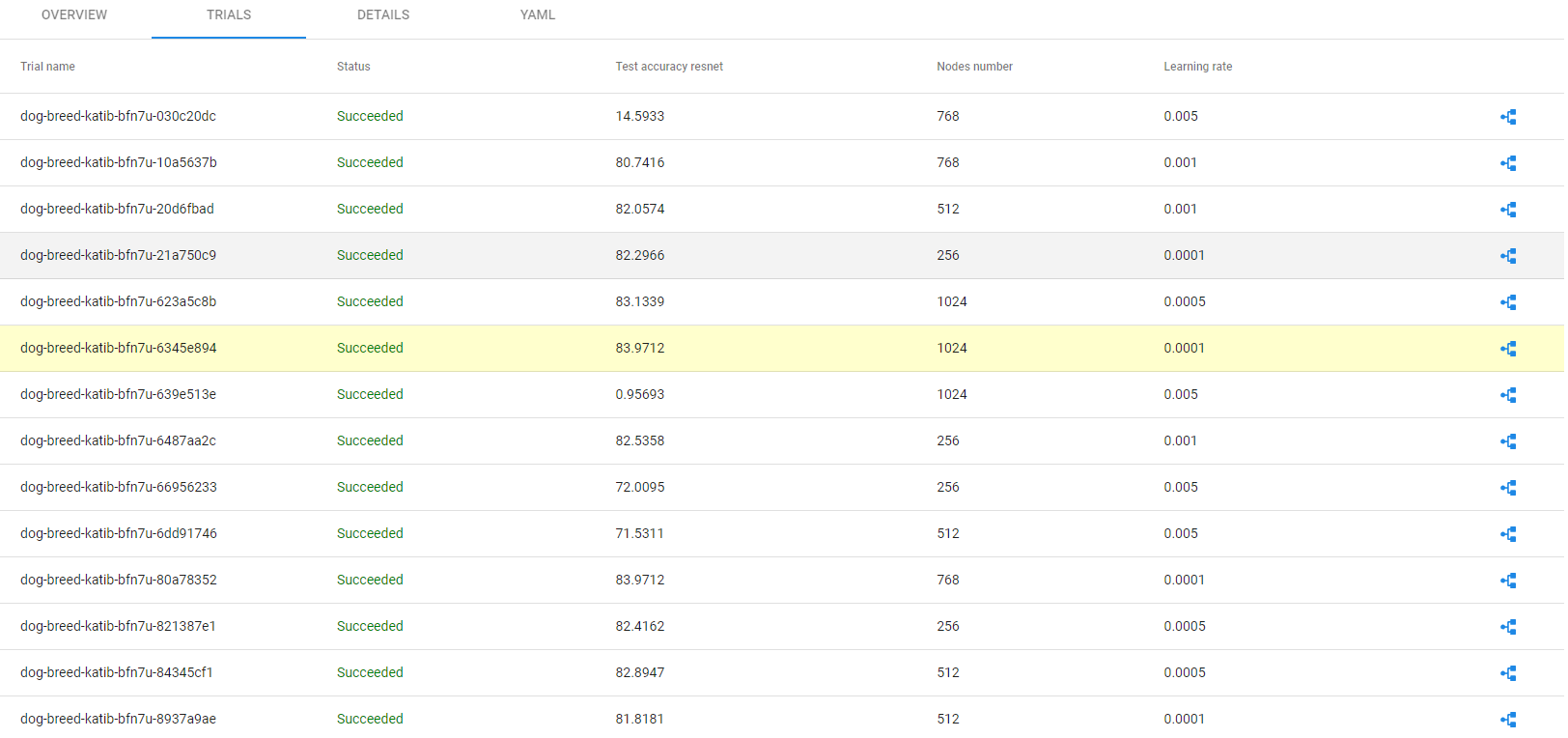

On the Katib experiment page, you will see the new trials:

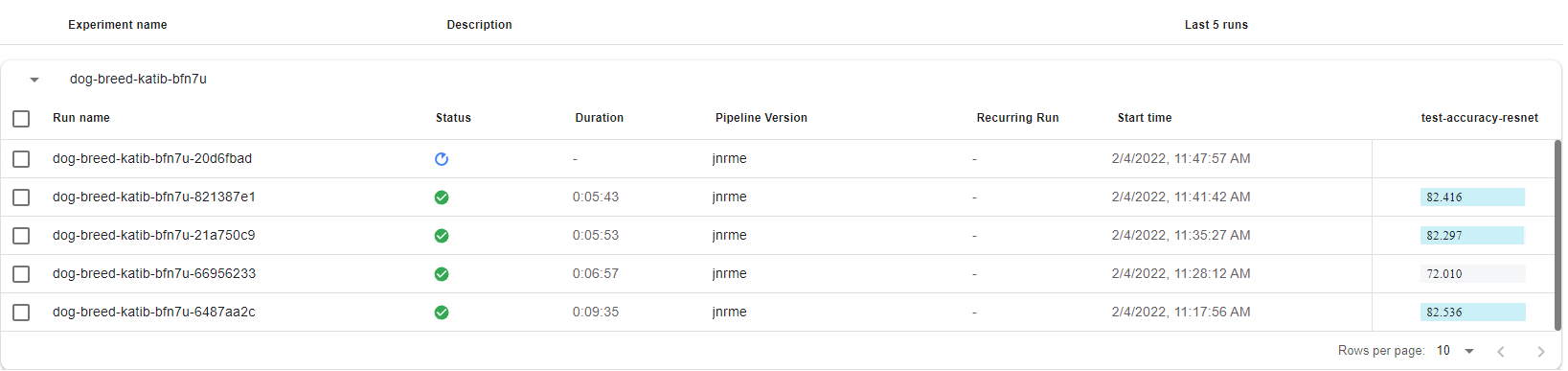

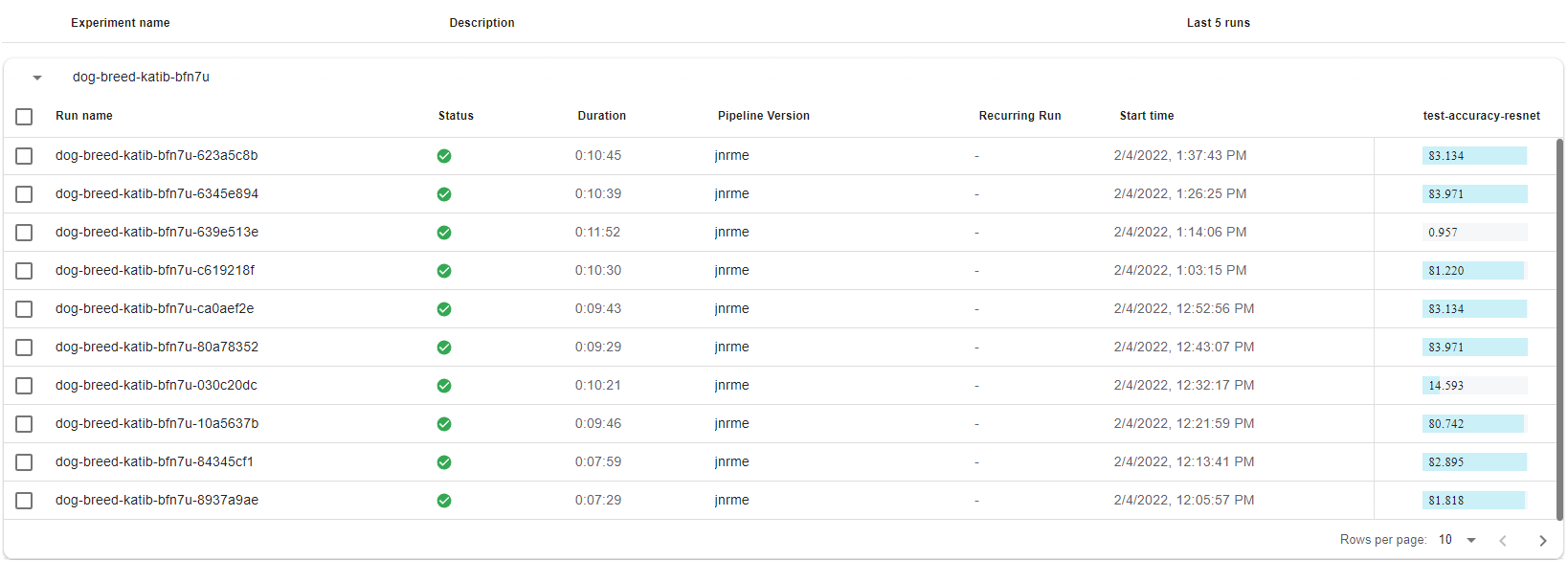

In the KFP UI, you will see the new runs:

Let’s unpack what just happened. Previously, Kale produced a single pipeline run from the notebook; now it creates multiple pipeline runs, each fed a different combination of arguments.
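To make "a different combination of arguments per run" concrete, here is a minimal random-search sketch. It assumes a hypothetical search space over `LR` and `BATCH_SIZE` and only draws the argument sets; it is an illustration of the idea, not Katib's implementation of its random algorithm.

```python
import random

# Hypothetical search space: name -> (type, lower bound, upper bound).
SEARCH_SPACE = {
    "LR": ("double", 1e-5, 1e-3),
    "BATCH_SIZE": ("int", 16, 64),
}


def sample_arguments(space, n_trials, seed=0):
    """Draw n_trials argument combinations by uniform random search."""
    rng = random.Random(seed)  # seeded so the draw is reproducible
    combos = []
    for _ in range(n_trials):
        args = {}
        for name, (kind, low, high) in space.items():
            if kind == "int":
                args[name] = rng.randint(low, high)  # inclusive integer bounds
            else:
                args[name] = rng.uniform(low, high)  # continuous value in range
        combos.append(args)
    return combos


for args in sample_arguments(SEARCH_SPACE, 3):
    print(args)  # each dict would become the arguments of one pipeline run
```

Each dict plays the role of one trial's inputs; in the lab, Kale passes such values as pipeline arguments so that every trial becomes its own KFP run.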

Katib is Kubeflow’s component for running general-purpose hyperparameter tuning jobs. Katib knows nothing about the jobs it actually runs (called trials in Katib jargon); all it cares about is the search space, the optimization algorithm, and the goal. Katib supports running simple Kubernetes Jobs (which run as Pods) as trials, but Kale implements a scheme in which each trial runs a pipeline in Kubeflow Pipelines and then collects the metrics from that pipeline run. This way we completely unify Katib with Kubeflow Pipelines, providing full visibility and reproducibility for each step of the HP tuning process via KFP.
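Because Katib treats each trial as a black box, all it sees is the metrics a trial reports. The sketch below assumes trial output that prints one `name=value` pair per line, the convention used by Katib's default stdout metrics collector, and parses it into a dict. `collect_metrics` is a hypothetical helper, and keeping only the last reported value per metric is a simplification of how Katib can aggregate repeated reports.

```python
import re

# One metric per line, e.g. "accuracy=0.87" or "lr=1e-4" (assumed convention).
METRIC_LINE = re.compile(
    r"\s*([\w-]+)\s*=\s*([+-]?(?:\d+\.?\d*|\.\d+)(?:[eE][+-]?\d+)?)\s*"
)


def collect_metrics(log_text):
    """Parse name=value lines from trial output, keeping the last value per name."""
    metrics = {}
    for line in log_text.splitlines():
        match = METRIC_LINE.fullmatch(line)
        if match:  # lines without a well-formed name=value pair are ignored
            metrics[match.group(1)] = float(match.group(2))
    return metrics


log = """Epoch 1/2
accuracy=0.71
loss=0.93
Epoch 2/2
accuracy=0.78
loss=0.64"""
print(collect_metrics(log))  # {'accuracy': 0.78, 'loss': 0.64}
```

Nothing in the parser depends on what produced the log, which is why a trial can run a whole pipeline instead of a single Pod as long as it reports its metrics in the expected form.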

As the Katib experiment produces trials, you will see more trials in the Katib UI. Note that the best trial, that is, the best model, is highlighted in the Katib UI.

More runs also appear in the KFP UI.
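Which trial counts as "best" follows from the objective type and goal set when configuring Katib. Here is a minimal sketch, assuming hypothetical trial names and objective values, of picking the best trial and checking whether it reached the goal; `best_trial` is illustrative and not a Katib API.

```python
def best_trial(trials, objective_type="maximize", goal=None):
    """Pick the best trial and report whether it reached the goal.

    trials: list of (trial_name, objective_value) pairs.
    """
    pick = max if objective_type == "maximize" else min
    name, value = pick(trials, key=lambda trial: trial[1])
    if goal is None:
        reached = False
    elif objective_type == "maximize":
        reached = value >= goal  # higher is better
    else:
        reached = value <= goal  # lower is better
    return name, value, reached


# Hypothetical trial results, not output from this lab.
trials = [("trial-a", 0.71), ("trial-b", 0.84), ("trial-c", 0.79)]
print(best_trial(trials, "maximize", goal=0.90))  # ('trial-b', 0.84, False)
```

With a maximize objective the highest value wins; with minimize (for example, a loss metric) the comparison flips, which is why the goal and its direction are defined together.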

When the Katib experiment completes, you can view all the trials in the Katib UI and all the runs in the KFP UI.

Congratulations! You have successfully run an end-to-end ML workflow all the way from a notebook to a reproducible multi-step pipeline with hyperparameter tuning, using Kubeflow as a Service, Kale, Katib, KF Pipelines, and Rok!